H.265 Vulkan Video Encoding (Scary)

A few months ago I was given the task to integrate an open source Vulkan video encoder into our renderer. I had never touched Vulkan before (I vaguely knew to feel fear towards it) and knew nothing about video compression, namely the H.264 and H.265/HEVC codecs.

This is part 2 to the adventure I started writing about.

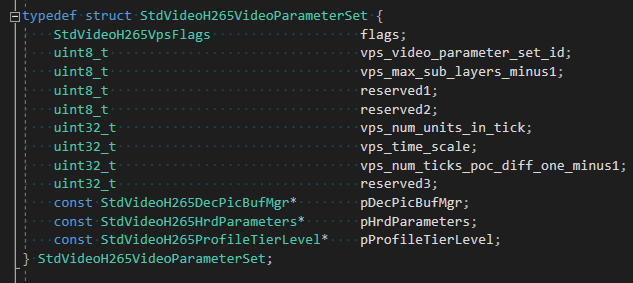

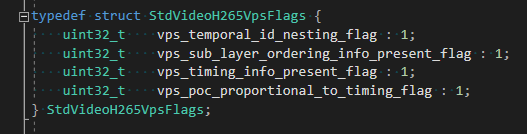

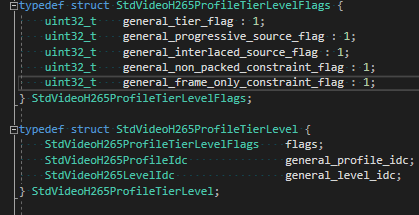

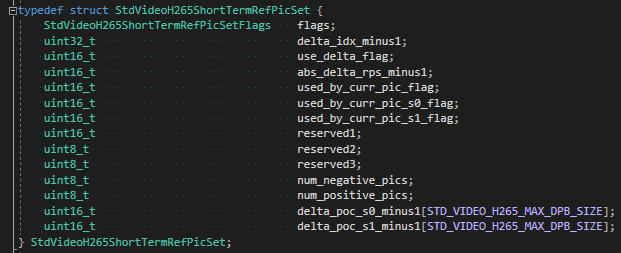

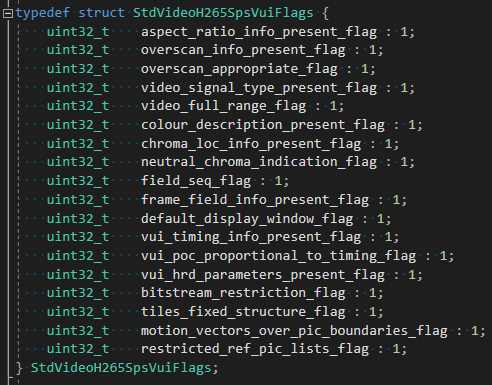

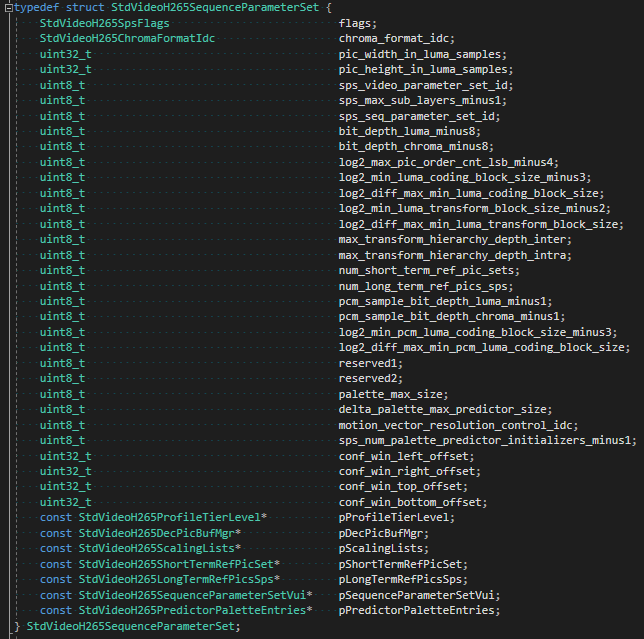

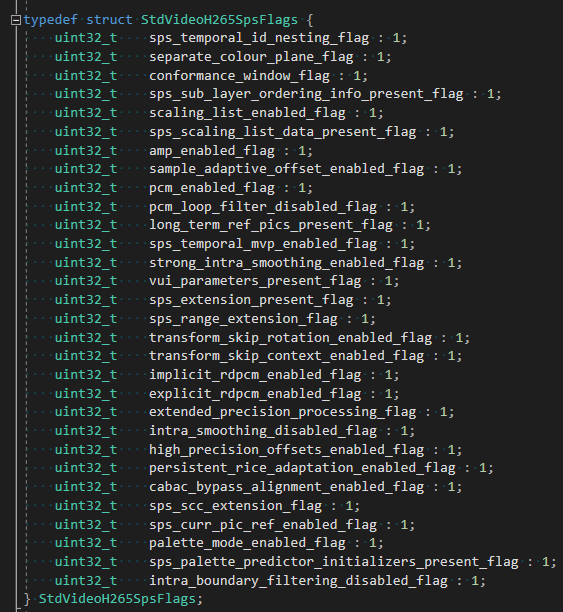

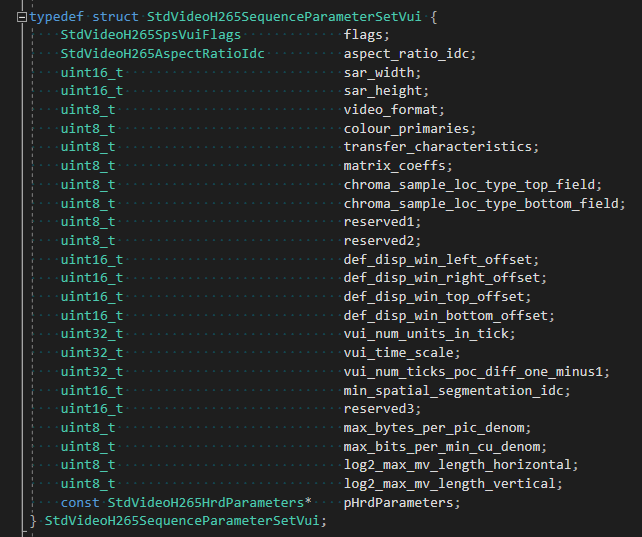

At this point I had managed to integrate pyroenc and get the Baseline, Main and High profiles for H.264 working. The next frontier was getting H.265 to work. I started implementing it on my own, since pyroenc hadn’t started working on it yet. So I took a look at some of the H.265 parameters that would need to be set. The experience was cacophonous:

This doesn't even cover all of it.

The First Impasse

I naively started out by zero-initializing the session parameters (VPS, SPS, PPS, and VUI) and then filling out the parameters that I recognized from H.264 and others that “seemed important”. I very quickly hit the same impasse I had with H.264: vkGetEncodedVideoSessionParametersKHR was returning VK_ERROR_OUT_OF_HOST_MEMORY even though I had all the RAM in the world. As a reminder, vkGetEncodedVideoSessionParametersKHR takes a video session parameters object which contains your desired parameters and outputs the encoded parameters in a buffer you give it.

I had the benefit of having already suffered the same fate with H.264, an experience I’ve outlined in my previous article. I knew the most probable cause was a problematic session parameter value. I spent a few weeks trying to figure it out on my own, which is a significant amount of time to spend on one bug. I developed different debugging strategies to flush out the faulty parameter. All in all I ended up learning more about the H.265 codec, but I could not resolve my issue, and it had been weeks. Was the issue even with a faulty parameter?

At some point I decided to file a bug on the NVIDIA forum. “It’s not me, it’s you.” This led to an answer that gave me some breadcrumbs to follow, and renewed hope:

Hi Nahalie, VK_ERROR_OUT_OF_HOST_MEMORY indicates that our internal driver validation thinks that the specified VPS values are invalid. Could you provide a minimal sample so we could test with our debug drivers what triggers the issue? An API trace generated by the Vulkan API trace layer would also be appreciated.

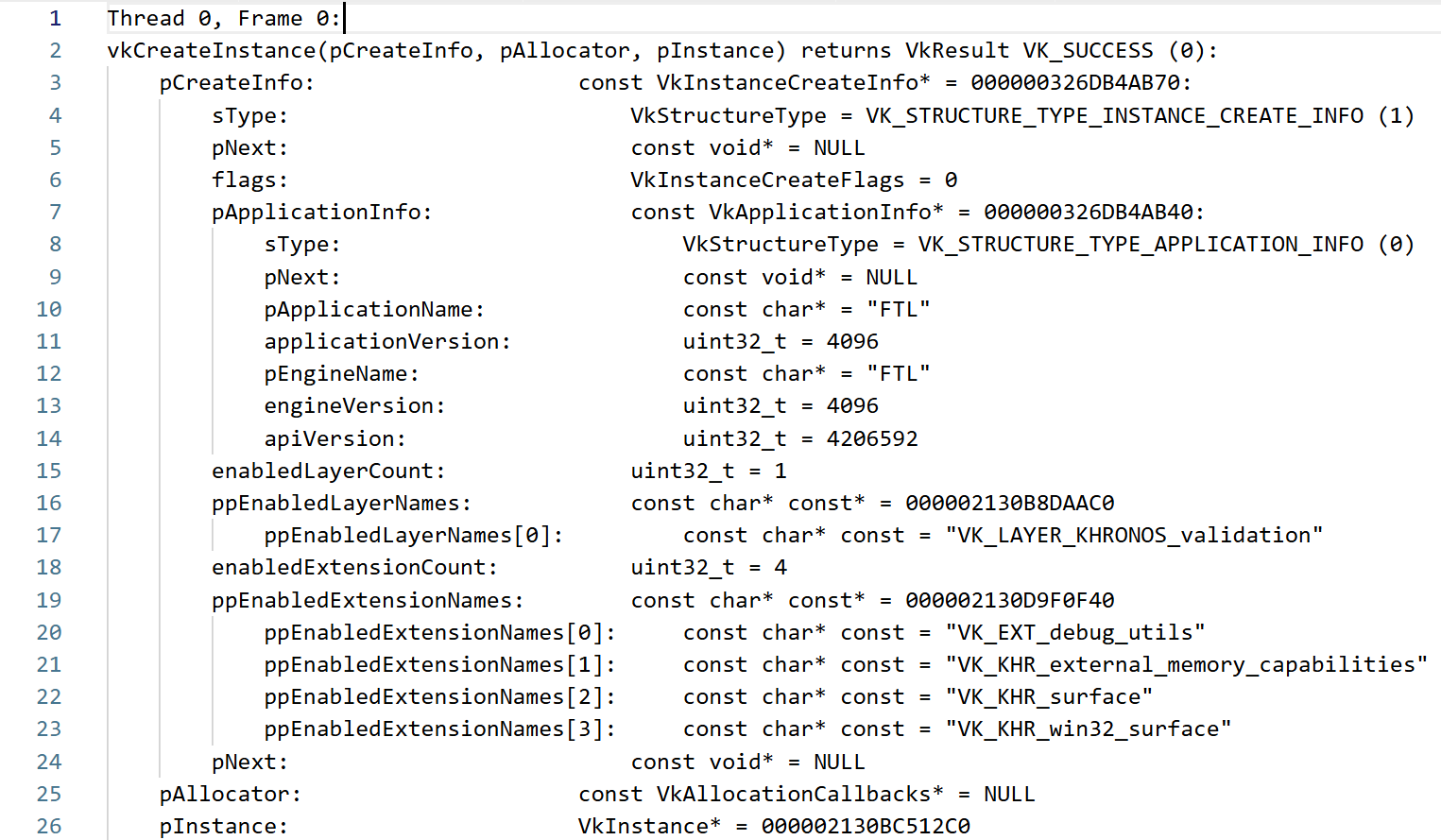

Ok so it wasn’t them, it was me. This was enough hope for me to move on. There was something wrong with my set parameters. I also learned what a Vulkan API trace was:

I posted my API trace in the bug report, but it took them a while to respond. Where else could I scavenge for help? I learned about the Vulkan Discord, so I posted my API trace there and begged for help. That’s where I got my answer. I hadn’t set the parameter pDecPicBufMgr, so it kept its default value of nullptr, and that was not allowed. The decoded picture buffer, even though it has “decoded” in its name, is important in the encoding process. It stores the decoded reference pictures which are then used to make P frames (or the “delta” frames). So yay!

The Second Impasse

WebCodecs Fail

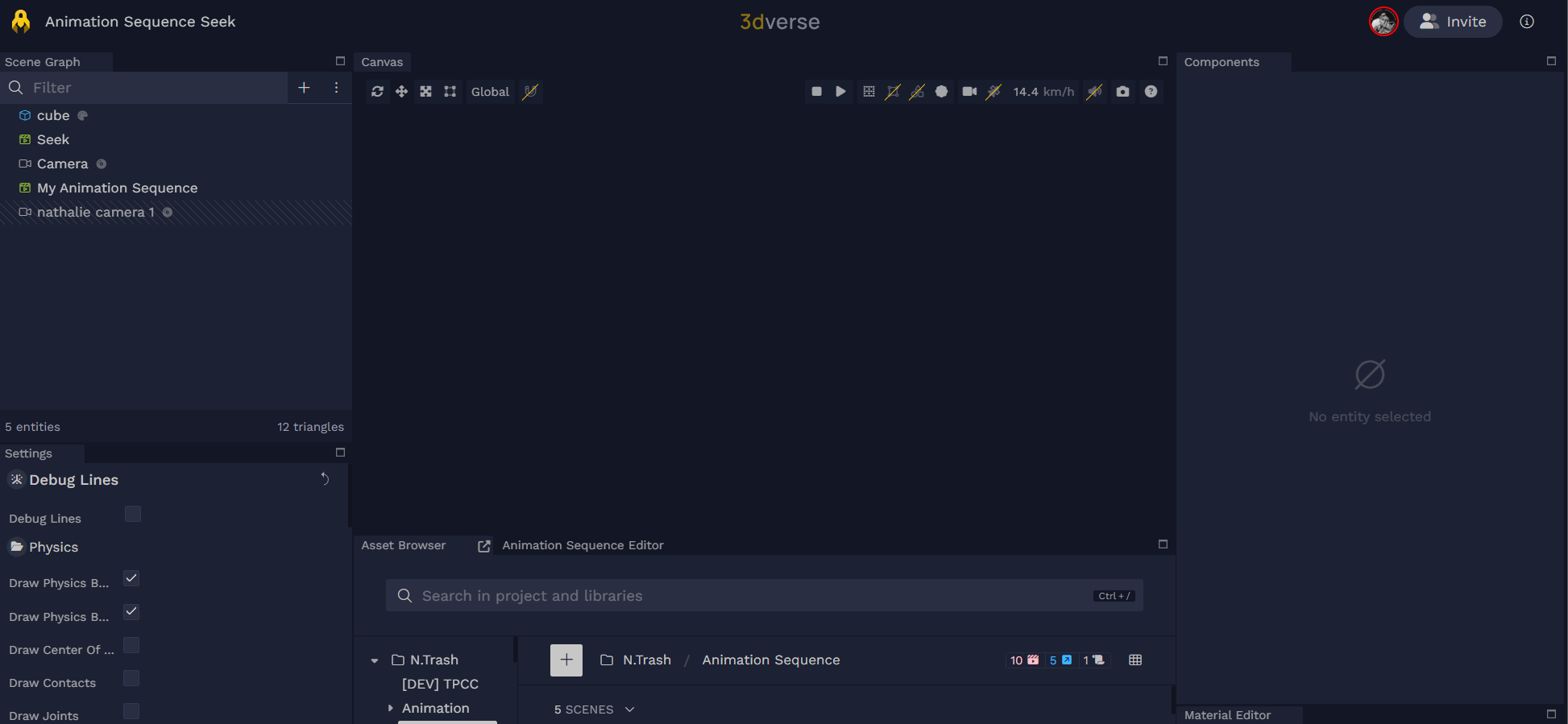

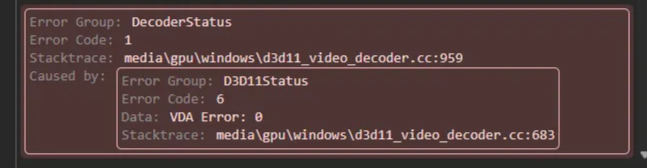

At this point I was able to encode frames without there being any errors returned by any functions. The renderer was encoding the frames and then sending them over to the 3dverse web editor to decode them. The web editor uses the WebCodecs API to decode the streamed frames.

Could it be so simple? No, unfortunately I was getting a black screen on the decoding end and an error in the web console. My frames weren’t being decoded.

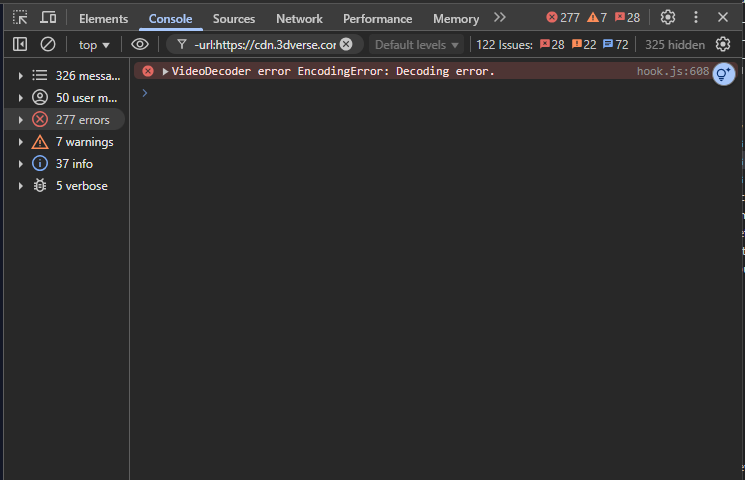

This is the laconic error I was getting in the browser. The bug didn’t reveal more of itself other than as a “decoding error”.

This error simply indicated that decoding had failed in the WebCodecs API. But why??? I didn’t have any more info to go on. Would decoding also fail with ffmpeg?

Ffmpeg Success

To test whether the encoding was a success, I could encode my frames and output them sequentially in one file, and then use ffmpeg to play back the file.

// See if any issues arise using ffprobe

ffprobe output.h265

// Play it with ffplay. Does it seem correct?

ffplay output.h265
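For reference, here is a minimal sketch of how a file like output.h265 could be produced from the encoder output. It is not the code from my renderer: the helper name dumpElementaryStream and the EncodedChunk alias are made up for illustration, and it assumes the encoded parameter sets and frames are already in Annex B byte-stream form (NAL units prefixed with start codes), so they can simply be concatenated.

```cpp
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// One blob of encoded bytes. Assumption: the bytes are already in Annex B
// byte-stream form, i.e. each NAL unit is prefixed with a start code.
using EncodedChunk = std::vector<std::uint8_t>;

// Hypothetical helper: writes the encoded parameter sets (VPS/SPS/PPS) first,
// then every frame in encode order, into a single raw .h265 file.
// Returns false if the file could not be opened or a write failed.
bool dumpElementaryStream(const std::string& path,
                          const EncodedChunk& parameterSets,
                          const std::vector<EncodedChunk>& frames)
{
    std::ofstream out(path, std::ios::binary | std::ios::trunc);
    if (!out) {
        return false;
    }

    auto writeChunk = [&out](const EncodedChunk& chunk) {
        if (chunk.empty()) {
            return;  // nothing to write for this chunk
        }
        out.write(reinterpret_cast<const char*>(chunk.data()),
                  static_cast<std::streamsize>(chunk.size()));
    };

    writeChunk(parameterSets);
    for (const EncodedChunk& frame : frames) {
        writeChunk(frame);
    }

    out.flush();
    return static_cast<bool>(out);
}
```

The resulting file has no container around it, which is why it can be handed straight to ffprobe and ffplay as a raw stream.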

When I did this, everything played correctly and ffprobe didn’t raise any errors. But I was still getting that mysterious black screen and error with WebCodecs in the browser. I posted again on the Vulkan Discord, but I didn’t get an answer this time.

Infuriating. I had one last idea.

Strategy

I could try to generate encoded frames using NVIDIA’s official vk_video_samples and then decode them using the WebCodecs API with a simple JavaScript program. This would confirm whether the issue was with Vulkan Video or whether it lay with my implementation. So that’s exactly what I did.

Success. I saw the video encoded by vk_video_samples and decoded by WebCodecs play in full.

This meant that, yet again, the issue with my implementation probably lay within the depths of the parameter sets. This time, however, the parameter sets were correct enough for ffmpeg to decode but not for WebCodecs.

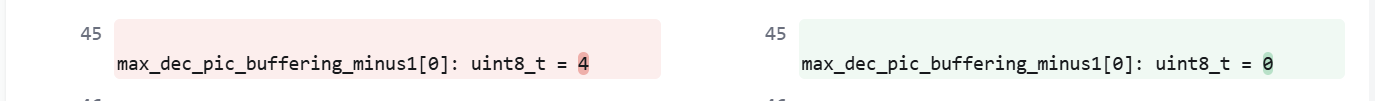

My last idea to make some sense of this was to create two Vulkan API traces, one using vk_video_samples (the successful one) and one using my implementation (the failing one). Then I could compare, i.e. do a diff on, each parameter and see exactly where the discrepancies were. Those discrepancies could then point me to the wrongly set parameter.
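The comparison itself can be sketched in a few lines. This is an illustration rather than the tooling I actually used: it assumes each trace has first been reduced by hand to a plain text dump with one "name = value" pair per line (real API trace output is more verbose), and the names ParamMap, ParamDiff, parseParams and diffParams are hypothetical.

```cpp
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Parsed parameter dump: parameter name -> value, both kept as text.
using ParamMap = std::map<std::string, std::string>;

struct ParamDiff {
    std::string name;
    std::string reference;  // value in the working trace, "<missing>" if absent
    std::string candidate;  // value in the failing trace, "<missing>" if absent
};

// Strips leading and trailing spaces, tabs and carriage returns.
static std::string trim(const std::string& s) {
    const std::string::size_type first = s.find_first_not_of(" \t\r");
    if (first == std::string::npos) {
        return "";
    }
    const std::string::size_type last = s.find_last_not_of(" \t\r");
    return s.substr(first, last - first + 1);
}

// Assumed input format: one "name = value" pair per line.
ParamMap parseParams(const std::string& text) {
    ParamMap params;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        const std::string::size_type eq = line.find('=');
        if (eq == std::string::npos) {
            continue;  // skip lines that are not name/value pairs
        }
        params[trim(line.substr(0, eq))] = trim(line.substr(eq + 1));
    }
    return params;
}

// Lists every parameter whose value differs between the two dumps,
// including parameters that appear in only one of them.
std::vector<ParamDiff> diffParams(const ParamMap& reference,
                                  const ParamMap& candidate) {
    std::vector<ParamDiff> diffs;
    for (const auto& entry : reference) {
        const auto it = candidate.find(entry.first);
        if (it == candidate.end()) {
            diffs.push_back({entry.first, entry.second, "<missing>"});
        } else if (it->second != entry.second) {
            diffs.push_back({entry.first, entry.second, it->second});
        }
    }
    for (const auto& entry : candidate) {
        if (reference.find(entry.first) == reference.end()) {
            diffs.push_back({entry.first, "<missing>", entry.second});
        }
    }
    return diffs;
}
```

Keeping the values as text avoids having to know each parameter's type, which is convenient when you don't yet know what half of the parameters mean.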

There were a good number of differences between the two API traces. For each parameter that was set differently, I tried to gain an understanding of what that parameter was, and whether I had given it an unacceptable value. I tried fiddling with all the different parameters. Nothing was working. I was about to give up. I double-checked everything and then found the culprit.

WebCodecs Success

The culprit parameter was:

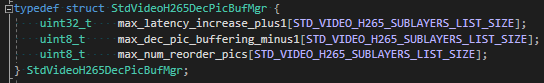

max_dec_pic_buffering_minus1. This was a very sneaky parameter hiding deep in the SPS.

max_dec_pic_buffering_minus1[0] was set to 4 by vk_video_samples. Interesting. Rather than blindly copying vk_video_samples, I researched the parameter.

max_dec_pic_buffering_minus1, plus 1, specifies the maximum number of decoded pictures that can be stored in the decoded picture buffer (DPB). It’s an array so that you may specify the DPB size for each potential sublayer. In my case I was only using one reference frame to make P frames, and I didn’t have any sublayers. In the DPB I needed space for my reference frame and for my current frame being encoded, i.e. the reconstructed image. My DPB held frames n and n-1, so the size of my DPB was 2. When I did this:

// TODO: For B-frames, we might need 3.

constexpr uint32_t DPBSize = 2;

StdVideoH265DecPicBufMgr dec_pic_buf_mgr = {};

dec_pic_buf_mgr.max_dec_pic_buffering_minus1[0] = DPBSize - 1;

sps.pDecPicBufMgr = &dec_pic_buf_mgr;

Everything worked. On the 3dverse editor end, my frames were being decoded!!
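The arithmetic behind DPBSize can be spelled out as a small sketch. The helper names below (dpbSlotsNeeded, toMinus1, slotsForFrame) are hypothetical and are not part of Vulkan or of my renderer, and the ping-pong slot assignment is only one plausible way to use a two-slot DPB, assuming I and P frames only.

```cpp
#include <cstdint>

// DPB slots needed when every picture is an I or P frame: one slot per
// reference picture, plus one for the picture currently being reconstructed.
constexpr std::uint32_t dpbSlotsNeeded(std::uint32_t referenceFrames) {
    return referenceFrames + 1;
}

// H.265 syntax elements whose names end in "_minus1" store the value minus
// one. Precondition: count >= 1.
constexpr std::uint8_t toMinus1(std::uint32_t count) {
    return static_cast<std::uint8_t>(count - 1);
}

// Which DPB slot receives the reconstruction of frame n, and which slot
// still holds frame n-1 as the reference.
struct SlotPair {
    std::uint32_t reconstructed;
    std::uint32_t reference;  // unused for the very first (I) frame
};

// Assumed ping-pong scheme for a two-slot DPB: the slots swap roles on
// every frame.
constexpr SlotPair slotsForFrame(std::uint32_t n) {
    return SlotPair{n % 2, (n + 1) % 2};
}

// One reference frame gives a DPB of 2 pictures, stored as 1.
static_assert(dpbSlotsNeeded(1) == 2, "reference frame + reconstructed frame");
static_assert(toMinus1(dpbSlotsNeeded(1)) == 1, "stored value is size - 1");
```

With one reference frame this gives the same value as DPBSize - 1 above.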

It turns out ffmpeg didn’t need max_dec_pic_buffering_minus1 to figure out what the size of the DPB should be (as there are plenty of other ways to figure that out through other parameters and the slice headers) but the WebCodecs API did. Wow… the whims of video compression. But hallelujah, I got H.264 and H.265 to work.