Server-Side Rendering for XR

Streaming real-time XR to the web sounds great, until latency ruins the experience.

In this post, we explain how we made server-side rendering not just usable, but comfortable in XR, using a few clever tricks to smooth out the delay and keep everything feeling grounded, even when the network isn’t.

At 3dverse, we’re building a technology that streams real-time 3D content directly to the web. Instead of requiring powerful local hardware or heavy downloads, our approach renders scenes on the server and streams the resulting frames to the client, just like video, but interactive. This enables instant access, secure sharing, and seamless collaboration across devices, all within a browser.

In short, the client simply requests to load a 3D scene and sends the position and orientation of the desired point of view. The server then does its best to render the scene from that point of view as quickly as possible. Everything is done in real time, allowing users to navigate remotely through a 3D scene alongside collaborators who are editing it.

At some point, we aimed to extend our technology to support VR/AR experiences, allowing XR devices to interact with realistic scenes in real time without requiring local processing power.

Our first AR device was a (R.I.P.) HoloLens, for which we developed a native application implementing our streaming protocol. Later, WebXR came into play, and since we had been web-based from the beginning, it became our new playground.

Our XR applications consist of two main components:

- An input sender that captures and transmits the headset or smartphone's position and orientation to the server

- A decoder that decompresses and displays the server-rendered frames
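To make the input sender more concrete, here is a minimal sketch of what a single pose update could look like on the wire. The byte layout, the `EyePose` type, and the `encodePoseMessage`/`decodePoseMessage` functions are hypothetical and are not our actual protocol. We also assume a request id travels with each update, so that a returned frame could later be matched with the pose it was rendered for.

```typescript
// HYPOTHETICAL wire format for one pose update (not the real 3dverse protocol):
// [u32 requestId][u8 eyeCount][per eye: 3 x f32 position, 4 x f32 quaternion]
interface EyePose {
  position: [number, number, number];                // meters
  orientation: [number, number, number, number];     // unit quaternion (x, y, z, w)
}

const FLOATS_PER_EYE = 7;

function encodePoseMessage(requestId: number, eyes: EyePose[]): ArrayBuffer {
  const buffer = new ArrayBuffer(4 + 1 + eyes.length * FLOATS_PER_EYE * 4);
  const view = new DataView(buffer);
  view.setUint32(0, requestId, true); // little-endian
  view.setUint8(4, eyes.length);      // 1 for mono (phone AR), 2 for stereo
  let offset = 5;
  for (const eye of eyes) {
    for (const value of [...eye.position, ...eye.orientation]) {
      view.setFloat32(offset, value, true);
      offset += 4;
    }
  }
  return buffer;
}

// Inverse operation, as the receiving side would perform it.
function decodePoseMessage(buffer: ArrayBuffer): { requestId: number; eyes: EyePose[] } {
  const view = new DataView(buffer);
  const requestId = view.getUint32(0, true);
  const eyeCount = view.getUint8(4);
  let offset = 5;
  const next = (): number => {
    const value = view.getFloat32(offset, true);
    offset += 4;
    return value;
  };
  const eyes: EyePose[] = [];
  for (let i = 0; i < eyeCount; i++) {
    const position: [number, number, number] = [next(), next(), next()];
    const orientation: [number, number, number, number] = [next(), next(), next(), next()];
    eyes.push({ position, orientation });
  }
  return { requestId, eyes };
}
```

Positions and orientations are sent as 32-bit floats, which is plenty of precision for head tracking and keeps a stereo update at 61 bytes in this layout.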

This setup provides:

- Fast loading (slow load times are particularly frustrating in XR experiences)

- Improved image quality

- Cross-device collaboration, including with non-XR users

- An equal experience regardless of the device

You might wonder how streaming an entire point of view (potentially stereoscopic) in real time can be bearable at all. Even slight latency can cause motion sickness in VR headsets or break the illusion when rendering objects in AR.

In our early tests, we drew each frame as it was received from the server, and, as you might expect, the result was terrible. Every frame came with a delay and didn’t match the current position and orientation of the headset. Even the slightest head movement created a disconnect: your body was present while your eyes watched the past. Using a VR headset resulted in immediate motion sickness, while with a mobile phone in AR mode, the scene failed to maintain its position.

Latency is critical, so it must be minimized at all costs. To reduce network usage, frames are compressed to lower the payload between server and client. This reduces transmission time but requires the client to decompress the data, which adds processing time and introduces further delay.

In the end, pure network latency cannot be eliminated entirely, so we had to find a solution to compensate for it.

Illusion of stability

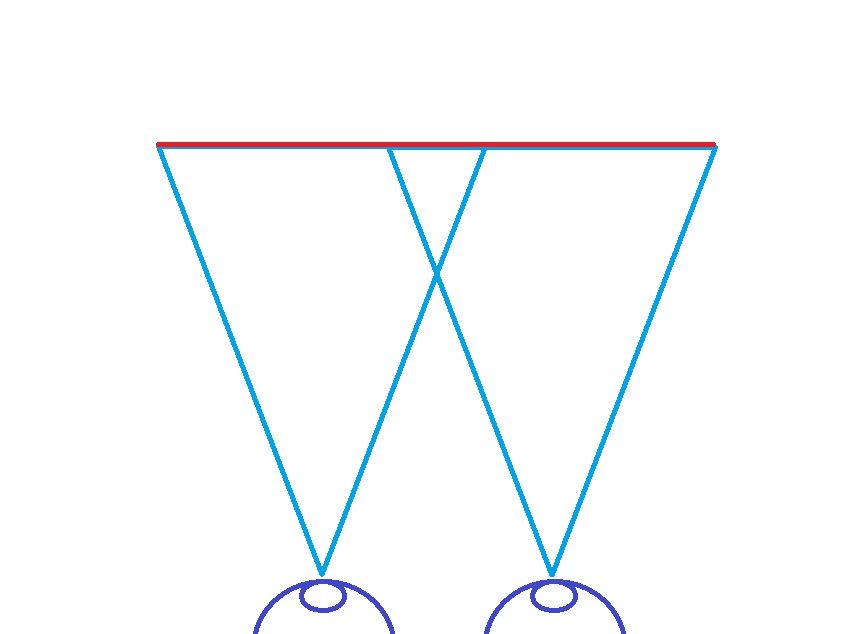

Since network latency cannot be eliminated, we create the illusion that it does not exist by tricking the eyes. To do that, instead of rendering the streamed content directly to the screen, we draw it on a plane:

- This plane covers the device's whole viewport and acts as a kind of virtual TV screen.

- Between two frames received from the remote server, the plane stays in the same place, creating an anchored viewpoint.

- When a new frame is received, the plane moves to the most appropriate position to follow the device’s movement. This helps smooth the motion and hide small delays between input and frame arrival.
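The behavior described in this list can be sketched as a tiny state holder: the plane only moves when a decoded remote frame arrives, and the per-frame XR render loop just reads it. `StreamedFramePlane` and its methods are hypothetical names, and dropping frames that arrive out of order is our own assumption, not something the pipeline necessarily does.

```typescript
type Vec3 = [number, number, number];
type Quat = [number, number, number, number]; // (x, y, z, w)

interface Pose {
  position: Vec3;
  orientation: Quat;
}

// HYPOTHETICAL helper: the "virtual TV screen" showing the latest remote frame.
class StreamedFramePlane<Texture> {
  private latest: { frameId: number; texture: Texture; pose: Pose } | null = null;

  // Called only when a decoded remote frame is ready: the only place the plane moves.
  onDecodedFrame(frameId: number, texture: Texture, planePose: Pose): void {
    // Assumption: frame ids increase; an older frame arriving late would move
    // the plane backwards in time, so it is skipped.
    if (this.latest !== null && frameId <= this.latest.frameId) return;
    this.latest = { frameId, texture, pose: planePose };
  }

  // Called on every local XR frame. The head pose is deliberately not an input:
  // between two remote frames the plane stays put, and only the local camera
  // (driven by the headset tracking) moves around it.
  current(): { texture: Texture; pose: Pose } | null {
    return this.latest === null
      ? null
      : { texture: this.latest.texture, pose: this.latest.pose };
  }
}
```

The local render loop keeps running at the device's native refresh rate regardless of how often remote frames arrive, which is what keeps head motion itself free of network latency.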

Implementation

- For each XR frame produced by the device, each eye's transform is converted into the remote rendering server's space and sent to the server.

- For each frame it produces, the server attaches metadata to the encoded frame containing the camera transforms in the server's global space.

- When a frame is received, it is queued for decoding, and its metadata is attached to the decode request.

- When the frame is decoded, the camera transforms from its metadata are projected into the XR device space to recover the point of view the XR device had when the frame was requested.

- These projected transforms in XR device space serve as the relative positions and orientations used to place and orient the plane. The plane is placed at a fixed distance from the camera position given by the frame metadata, creating the illusion that this point of view is stable at this position.
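The transform chain behind these steps can be sketched as follows. This is a minimal sketch assuming column-major 4x4 matrices (the layout used by WebGL and by WebXR's `XRRigidTransform.matrix`), cameras looking down their local -Z axis, and a known rigid transform `deviceFromServer` relating the server's global space to the XR reference space. How that transform is obtained is not covered here; it, `placePlane`, and the other names are hypothetical.

```typescript
// Column-major 4x4 matrix: element (row, col) lives at index col * 4 + row.
type Mat4 = number[];

function multiply(a: Mat4, b: Mat4): Mat4 {
  const out: Mat4 = new Array<number>(16).fill(0);
  for (let col = 0; col < 4; col++) {
    for (let row = 0; row < 4; row++) {
      let sum = 0;
      for (let k = 0; k < 4; k++) sum += a[k * 4 + row] * b[col * 4 + k];
      out[col * 4 + row] = sum;
    }
  }
  return out;
}

// Tangents of the four half-angles of a (possibly asymmetric) frustum, all positive.
interface FovTangents { left: number; right: number; up: number; down: number; }

interface PlacedPlane {
  deviceFromPlane: Mat4; // plane center and orientation in XR device space
  width: number;         // meters
  height: number;        // meters
}

// deviceFromServer: ASSUMED known transform, server global space -> XR device space.
// serverFromCamera: camera transform read from the frame metadata.
function placePlane(
  deviceFromServer: Mat4,
  serverFromCamera: Mat4,
  fov: FovTangents,
  distance: number,
): PlacedPlane {
  // Where the eye was, in device space, when this frame was requested.
  const deviceFromCamera = multiply(deviceFromServer, serverFromCamera);
  // For an asymmetric frustum, the image center is offset from the -Z axis.
  const offsetX = (distance * (fov.right - fov.left)) / 2;
  const offsetY = (distance * (fov.up - fov.down)) / 2;
  const cameraFromPlane: Mat4 = [
    1, 0, 0, 0,
    0, 1, 0, 0,
    0, 0, 1, 0,
    offsetX, offsetY, -distance, 1,
  ];
  return {
    deviceFromPlane: multiply(deviceFromCamera, cameraFromPlane),
    // The plane exactly fills the frame's frustum at that distance.
    width: distance * (fov.left + fov.right),
    height: distance * (fov.up + fov.down),
  };
}
```

A unit quad in its local XY plane, scaled by `width` × `height` and transformed by `deviceFromPlane`, then faces the eye that requested the frame, so from that exact pose the textured quad looks identical to the server's rendering.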

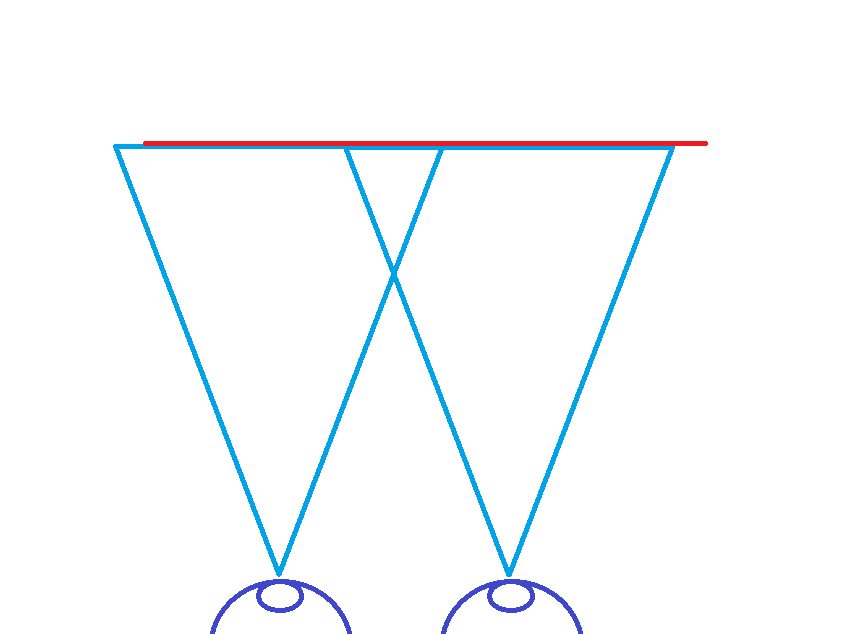

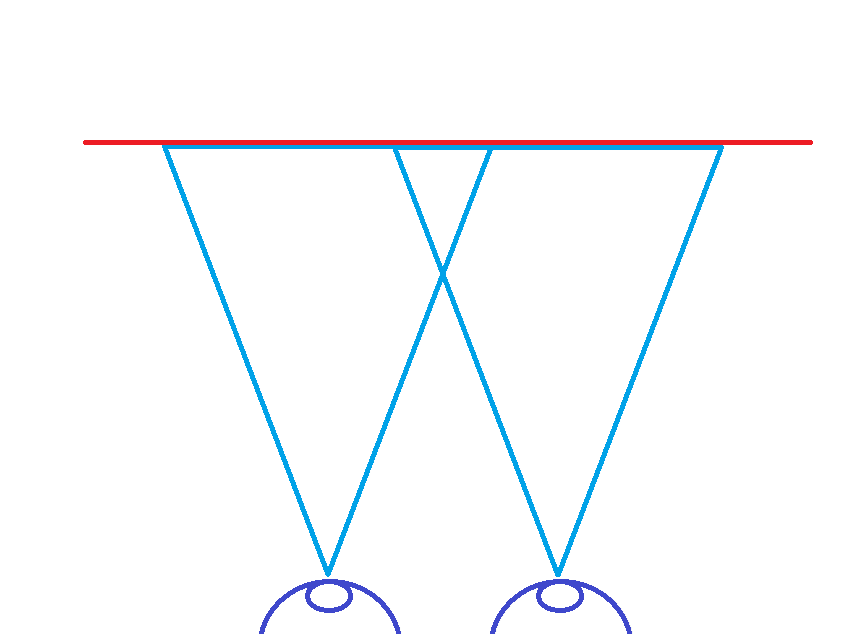

Overscan

With this technique, the frame looks more stable (maybe too stable): while the device moves between two remote frames, the edges of the plane become visible.

The faster you move, the bigger these empty spaces get. To address this, we make the plane larger than the viewport by a given factor, padding it so that less empty space shows while strafing. That means:

- Rendering a larger field of view from the server.

- Upscaling the plane size to match.

- Widening the client’s projection frustum accordingly.

This strategy is called overscan.

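To "reframe" the original image into a larger one, we expand the field of view (FOV) of the projection the server uses to render the scene. Let's define an overscan factor $k$, such as 1.2 (meaning the frame is 20% larger along each axis). Scaling the half-extent of the image by $k$ means scaling the tangent of the half-angle, so the new FOV along each axis becomes:

$$\mathrm{FOV}' = 2\arctan\left(k \tan\frac{\mathrm{FOV}}{2}\right)$$

Then the plane dimensions are simply multiplied by this factor.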
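The formula and the plane scaling can be sketched as follows. This is a minimal sketch assuming OpenGL-style projection matrices (column-major, camera looking down -Z), which is how WebXR's `XRView.projectionMatrix` is typically laid out; all function names are hypothetical. Because headset eye frustums are often asymmetric, the general version scales each of the four half-angle tangents by $k$ instead of a single symmetric FOV.

```typescript
interface FovTangents { left: number; right: number; up: number; down: number; }

// Symmetric case: FOV' = 2 * atan(k * tan(FOV / 2)).
function overscanFov(fovRad: number, k: number): number {
  return 2 * Math.atan(k * Math.tan(fovRad / 2));
}

// General case: scaling each half-angle tangent by k scales the plane's
// width and height by k as well.
function overscanTangents(t: FovTangents, k: number): FovTangents {
  return { left: k * t.left, right: k * t.right, up: k * t.up, down: k * t.down };
}

// Keep the same pixel density: the rendered resolution grows by k per axis.
function overscanResolution(width: number, height: number, k: number): [number, number] {
  return [Math.round(width * k), Math.round(height * k)];
}

// Read the tangents back from a glFrustum-style column-major projection matrix.
function tangentsFromProjection(p: ArrayLike<number>): FovTangents {
  return {
    left: (1 - p[8]) / p[0],
    right: (1 + p[8]) / p[0],
    up: (1 + p[9]) / p[5],
    down: (1 - p[9]) / p[5],
  };
}

// Build the matching projection matrix from tangents (e.g. a widened frustum).
function projectionFromTangents(t: FovTangents, near: number, far: number): number[] {
  const m = new Array<number>(16).fill(0);
  m[0] = 2 / (t.left + t.right);
  m[5] = 2 / (t.up + t.down);
  m[8] = (t.right - t.left) / (t.left + t.right);
  m[9] = (t.up - t.down) / (t.up + t.down);
  m[10] = -(far + near) / (far - near);
  m[11] = -1;
  m[14] = (-2 * far * near) / (far - near);
  return m;
}
```

For instance, a symmetric 90° FOV with $k = 1.2$ becomes $2\arctan(1.2)$, roughly 100.4°, and a 1000×800 frame becomes 1200×960.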

The overscan buffer gives you "wiggle room". Even if the camera moves after the frame was rendered, the system can reproject the frame onto a plane with a corrected camera transform, and it will still appear valid: the larger FOV and resolution mean the visible region still falls within the bounds of the frame.
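To make this "wiggle room" concrete, the sketch below checks whether the current view is still fully covered by a frame placed on its plane. It assumes the current view's pose is expressed in the frame camera's space (column-major 4x4, -Z forward) and that both frustums are described by half-angle tangents; `isViewCovered` is a hypothetical helper. Since the plane is a rectangle and the view's footprint on it is a convex quadrilateral, testing the four corner rays is enough.

```typescript
type Mat4 = number[]; // column-major 4x4
interface FovTangents { left: number; right: number; up: number; down: number; }

// frameFromView: current view pose in the frame camera's space.
// viewFov: current view tangents; frameFov: (overscanned) tangents of the frame.
// distance: how far in front of the frame camera the plane was placed.
function isViewCovered(
  frameFromView: Mat4,
  viewFov: FovTangents,
  frameFov: FovTangents,
  distance: number,
): boolean {
  const m = frameFromView;
  const ox = m[12], oy = m[13], oz = m[14]; // eye position in frame space
  // The plane rectangle lies on z = -distance in frame camera space.
  const minX = -distance * frameFov.left, maxX = distance * frameFov.right;
  const minY = -distance * frameFov.down, maxY = distance * frameFov.up;
  const corners: [number, number][] = [
    [-viewFov.left, viewFov.up], [viewFov.right, viewFov.up],
    [-viewFov.left, -viewFov.down], [viewFov.right, -viewFov.down],
  ];
  for (const [cx, cy] of corners) {
    // Corner ray direction (cx, cy, -1) in view space, rotated into frame space.
    const dx = m[0] * cx + m[4] * cy - m[8];
    const dy = m[1] * cx + m[5] * cy - m[9];
    const dz = m[2] * cx + m[6] * cy - m[10];
    if (dz >= 0) return false; // ray never reaches the plane
    const t = (-distance - oz) / dz;
    if (t <= 0) return false; // plane is behind the eye
    const x = ox + t * dx, y = oy + t * dy;
    if (x < minX || x > maxX || y < minY || y > maxY) return false;
  }
  return true;
}
```

With a 90° view, a plane 2 m away, and no overscan, sliding the head 30 cm sideways already exposes an empty edge; with $k = 1.2$ the same motion stays covered, while 50 cm does not.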

It’s a visual trick, but a powerful one: it lets users experience near-zero-latency head motion while frames continue to arrive asynchronously. It does come with some trade-offs:

- Increased bandwidth and memory usage: Larger frames consume more resources.

- Higher GPU cost: More pixels to render on the server and to push through shaders on the client.

What’s next?

We’re still working on improving the experience, and we have a few ideas in mind:

- Dynamic overscan factor: Adjust the overscan factor based on the current network latency. If the latency is low, we can reduce the overscan factor to save bandwidth and processing power. If the latency is high, we can increase it to maintain a smooth experience.
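One possible heuristic for a dynamic overscan factor, sketched under loose assumptions: if the head can rotate at up to ω rad/s and a frame takes L seconds to come back, the frame must cover an extra ωL radians beyond the half-FOV, which translates into $k = \tan(\mathrm{FOV}/2 + \omega L) / \tan(\mathrm{FOV}/2)$. This is a hypothetical rotation-only model (it ignores translation), and `dynamicOverscanFactor`, `LatencyEstimator`, and the clamping bounds are illustrative names and values, not tuned ones.

```typescript
// HYPOTHETICAL heuristic: cover the rotation the head can make while a frame
// is in flight. Rotation only; translation is ignored.
function dynamicOverscanFactor(
  halfFovRad: number,            // half of a symmetric field of view
  angularSpeedRadPerSec: number, // e.g. a recent peak head angular speed
  latencySec: number,            // e.g. a smoothed round-trip time
  minFactor = 1.0,
  maxFactor = 1.5,               // illustrative cap on bandwidth / GPU cost
): number {
  const extraAngle = Math.max(0, angularSpeedRadPerSec) * Math.max(0, latencySec);
  // Stay just below 90 degrees, where tan() blows up.
  const coveredHalfAngle = Math.min(halfFovRad + extraAngle, Math.PI / 2 - 1e-3);
  const needed = Math.tan(coveredHalfAngle) / Math.tan(halfFovRad);
  return Math.min(maxFactor, Math.max(minFactor, needed));
}

// Exponential moving average of round-trip time, so the factor does not
// jump with every single latency spike.
class LatencyEstimator {
  private estimate: number | null = null;
  private readonly smoothing: number;

  constructor(smoothing = 0.1) {
    this.smoothing = smoothing;
  }

  addSample(rttSec: number): number {
    this.estimate =
      this.estimate === null ? rttSec : this.estimate + this.smoothing * (rttSec - this.estimate);
    return this.estimate;
  }
}
```

Feeding the smoothed latency into `dynamicOverscanFactor` once per received frame would let the factor shrink on a good connection and grow when latency rises, within the chosen bounds.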

- Movement prediction: Use the last known position and orientation of the headset to predict where it will be when the next frame arrives. This can help reduce the perceived latency and make the experience feel more responsive.
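A minimal sketch of the simplest predictor, constant-velocity extrapolation from the last two pose samples: position is extrapolated linearly, and orientation keeps rotating along the same great arc using slerp with a parameter greater than 1. The types and `predictPose` are hypothetical, and real head motion is noisier than this model assumes, so the prediction horizon would likely need to stay short.

```typescript
type Vec3 = [number, number, number];
type Quat = [number, number, number, number]; // (x, y, z, w), unit length

interface PoseSample {
  time: number; // seconds
  position: Vec3;
  orientation: Quat;
}

// Spherical linear interpolation. For t > 1 it continues along the same great
// arc, i.e. it extrapolates the rotation at constant angular velocity.
function slerp(a: Quat, b: Quat, t: number): Quat {
  let dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
  let target: Quat = b;
  if (dot < 0) {
    // q and -q are the same rotation: flip to take the shorter arc.
    dot = -dot;
    target = [-b[0], -b[1], -b[2], -b[3]];
  }
  if (dot > 0.9995) {
    // Nearly identical orientations: linear blend, then renormalize.
    const x = a[0] + t * (target[0] - a[0]);
    const y = a[1] + t * (target[1] - a[1]);
    const z = a[2] + t * (target[2] - a[2]);
    const w = a[3] + t * (target[3] - a[3]);
    const len = Math.hypot(x, y, z, w);
    return [x / len, y / len, z / len, w / len];
  }
  const theta = Math.acos(dot);
  const wa = Math.sin((1 - t) * theta) / Math.sin(theta);
  const wb = Math.sin(t * theta) / Math.sin(theta);
  return [
    wa * a[0] + wb * target[0],
    wa * a[1] + wb * target[1],
    wa * a[2] + wb * target[2],
    wa * a[3] + wb * target[3],
  ];
}

// Constant-velocity prediction of the pose at targetTime.
function predictPose(prev: PoseSample, last: PoseSample, targetTime: number): PoseSample {
  const dt = last.time - prev.time;
  if (dt <= 0) return { ...last, time: targetTime };
  const t = (targetTime - prev.time) / dt; // t = 1 reproduces `last`, t > 1 extrapolates
  const position: Vec3 = [
    prev.position[0] + t * (last.position[0] - prev.position[0]),
    prev.position[1] + t * (last.position[1] - prev.position[1]),
    prev.position[2] + t * (last.position[2] - prev.position[2]),
  ];
  return { time: targetTime, position, orientation: slerp(prev.orientation, last.orientation, t) };
}
```

The client would then send `predictPose(prev, last, now + estimatedRoundTrip)` (hypothetical names) instead of the raw pose, so the server renders from where the head is expected to be when the frame arrives.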

- Perspective correction: Between two frames, the plane does not move, but the camera does. This can create a mismatch between the real world and the perspective of the frame drawn on the plane. We can use the camera transform to correct the perspective of the frame, making it look more natural and less jarring.

Conclusion

Server-side rendering for XR is a challenging task, but with the right techniques, it can be made comfortable and enjoyable. By creating the illusion of stability and using overscan to fill in the gaps, we can provide a smooth and immersive experience for users.